Len Calderone for RoboticsTomorrow

Accidents injure and incapacitate many people, including construction and factory workers, and combat does the same to soldiers. Many of these injuries result in the loss of a limb or leave the patient paraplegic. Research has advanced to the point where the brain can communicate with a prosthetic limb, making it act in place of the lost one.

There are several names for this communication interface, such as BCI (brain-computer interface), MMI (mind-machine interface), DNI (direct neural interface), STI (synthetic telepathy interface) and BMI (brain-machine interface). Whatever they are called, these devices allow a person’s brain to control a robotic device.

The forerunner of brain communication with a robot is neuroprosthetics, an area of neuroscience concerned with neural prostheses: artificial devices that replace the function of an impaired nervous system or compensate for other brain-related problems. Two well-known neuroprosthetic devices are the cochlear implant, which restores hearing, and the retinal implant, which restores partial vision.

Neuroprosthetics connect the nervous system to a device, whereas BCIs usually connect the brain to a computer system. Neuroprosthetics can be linked to any part of the nervous system, while BCIs interface with the central nervous system.

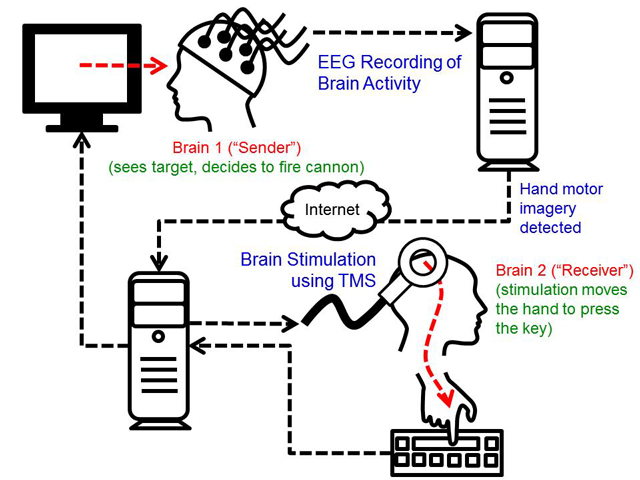

One type of BCI is the electroencephalographic (EEG) BCI. Because it does not depend on neuromuscular activity, an EEG-based BCI lets a person communicate through a computer without moving. It converts EEG (brain wave) signals detected at the scalp into commands, allowing the user to make selections on a computer screen.
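The article does not say how EEG signals become a screen selection. One common approach, assumed here purely for illustration, is the steady-state visual evoked potential (SSVEP) paradigm: each on-screen option flickers at its own frequency, and the option whose frequency dominates the EEG is selected. The Python sketch below scores each frequency with the Goertzel algorithm; the sampling rate, window length, option names and synthetic signal are all hypothetical.

```python
import math
import random

FS = 256        # assumed sampling rate in Hz (hypothetical)
EPOCH_S = 2.0   # assumed analysis window in seconds (hypothetical)

# Hypothetical on-screen options, each flickering at its own frequency (Hz)
OPTIONS = {"A": 8.0, "B": 10.0, "C": 12.0, "D": 15.0}


def goertzel_power(samples, fs, freq):
    """Spectral power of `samples` at the DFT bin nearest `freq` (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * freq / fs)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def select_option(epoch, fs=FS, options=OPTIONS):
    """Return the option whose flicker frequency carries the most EEG power."""
    powers = {name: goertzel_power(epoch, fs, f) for name, f in options.items()}
    return max(powers, key=powers.get)


def simulate_epoch(target_hz, fs=FS, seconds=EPOCH_S, seed=0):
    """Synthetic scalp signal: a response at target_hz buried in Gaussian noise."""
    rng = random.Random(seed)
    n = int(fs * seconds)
    return [2.0 * math.sin(2 * math.pi * target_hz * t / fs) + rng.gauss(0, 1.0)
            for t in range(n)]


if __name__ == "__main__":
    epoch = simulate_epoch(OPTIONS["B"])
    print(select_option(epoch))
```

Goertzel is used instead of a full FFT because only a handful of frequencies need to be checked, one per on-screen option.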

At present, there are two main BCI methods. The first uses a helmet and relies on algorithms that analyze the levels and peaks of brain activity, called electroencephalographic activity, produced in response to different mental processes. According to its developers, the computer system that reads the brain activity is adaptive and becomes faster and more efficient over time.
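The developers' adaptive algorithm is not described. As a hedged illustration of what "adaptive" can mean, the sketch below assumes each trial has already been reduced to a small feature vector (for example, hypothetical band-power levels) and uses a nearest-centroid classifier whose class means are updated after every labelled trial, so the model keeps tracking the user as more data arrive.

```python
from math import dist


class AdaptiveCentroidClassifier:
    """Nearest-centroid classifier whose class means are updated after every labelled trial."""

    def __init__(self):
        self.centroids = {}  # label -> running mean of that class's feature vectors
        self.counts = {}     # label -> number of trials seen for that class

    def update(self, features, label):
        """Fold one labelled trial into the running mean for its class."""
        if label not in self.centroids:
            self.centroids[label] = [float(x) for x in features]
            self.counts[label] = 1
            return
        self.counts[label] += 1
        n = self.counts[label]
        centroid = self.centroids[label]
        for i, x in enumerate(features):
            centroid[i] += (x - centroid[i]) / n  # incremental mean update

    def predict(self, features):
        """Return the label whose centroid is closest (Euclidean distance)."""
        if not self.centroids:
            raise ValueError("classifier has not seen any trials yet")
        return min(self.centroids, key=lambda label: dist(features, self.centroids[label]))


if __name__ == "__main__":
    clf = AdaptiveCentroidClassifier()
    # Hypothetical (alpha power, beta power) features for two mental states
    clf.update((8.0, 2.0), "relax")
    clf.update((3.0, 7.0), "focus")
    clf.update((9.0, 2.5), "relax")
    clf.update((2.5, 8.0), "focus")
    print(clf.predict((7.5, 3.0)))
```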

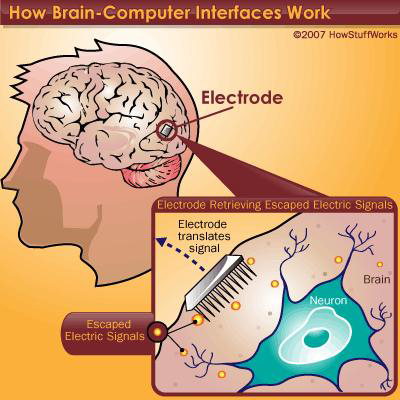

The second method implants electrodes directly on the brain to operate a robotic device. The surgery involves opening the skull and placing a sheet of electrodes on the surface of the brain. In the future, a wireless transmitter could be added, making the implant virtually undetectable to anyone but the patient, and the procedure might become almost an outpatient one, less invasive than breast augmentation.

A BCI works because of the way our brains function. The brain is filled with neurons, individual nerve cells connected to one another by dendrites and axons. Neurons communicate by sending small electrical signals that zip from neuron to neuron at up to 250 mph. These signals are generated by differences in electric potential, carried by ions, across the membrane of each neuron.
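To put the 250 mph figure in SI units, a quick conversion (1 mile = 1609.344 m); the 1 m path length is an arbitrary example, not a figure from the article:

```latex
250\ \text{mph} = \frac{250 \times 1609.344\ \text{m}}{3600\ \text{s}} \approx 111.8\ \text{m/s},
\qquad
t_{1\,\text{m}} = \frac{1\ \text{m}}{111.8\ \text{m/s}} \approx 8.9\ \text{ms}
```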

BrainGate™ is a wireless BCI system being developed by a research group at Brown University, which has also worked on a robotic arm controlled by a tethered BCI that allows paralyzed patients to feed themselves. Speaking the language of neurons, the neural interface uses a sensor implanted on the motor cortex of the brain and a device that analyzes the brain signals. The signals can then be interpreted and translated into cursor movements, so a user can control a computer by thought, much as people who can move their hands use a mouse.
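The article does not specify BrainGate's decoding algorithm. To illustrate how motor-cortex activity can be turned into cursor movement, the sketch below uses the classic population-vector method, a swapped-in technique: each recorded neuron is assumed to have a preferred movement direction, and neurons firing above their baseline "vote" for that direction. All rates, baselines and directions are hypothetical.

```python
import math


def population_vector(rates, baselines, preferred_dirs, gain=1.0):
    """Estimate a 2-D cursor velocity from firing rates (population-vector method).

    Each neuron contributes a vector along its preferred direction (radians),
    scaled by how far its firing rate is above (or below) its baseline."""
    if not (len(rates) == len(baselines) == len(preferred_dirs)) or not rates:
        raise ValueError("need equal-length, non-empty inputs")
    vx = vy = 0.0
    for rate, base, theta in zip(rates, baselines, preferred_dirs):
        weight = rate - base
        vx += weight * math.cos(theta)
        vy += weight * math.sin(theta)
    n = len(rates)
    return gain * vx / n, gain * vy / n


def step_cursor(pos, velocity, dt):
    """Integrate the decoded velocity over one time step of dt seconds."""
    return pos[0] + velocity[0] * dt, pos[1] + velocity[1] * dt


if __name__ == "__main__":
    # Four hypothetical neurons tuned to right, up, left and down (spikes/s)
    dirs = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
    baselines = [10.0, 10.0, 10.0, 10.0]
    rates = [20.0, 10.0, 0.0, 10.0]  # "right" neuron above baseline, "left" neuron below
    v = population_vector(rates, baselines, dirs)
    print(step_cursor((0.0, 0.0), v, dt=0.1))
```

Here the cursor drifts to the right because the right-tuned neuron fires above baseline while the left-tuned one falls below it; practical systems typically use more neurons and statistically fitted decoders.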

Beyond medical applications, BCIs could help factory workers perform advanced manufacturing tasks. Research at the University at Buffalo relies on a relatively inexpensive, non-invasive external device that reads EEG brain activity with 14 sensors and transmits the signal wirelessly to a computer, which then sends commands to the robot to control its movements.
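The headset-to-robot chain described above (14-channel EEG, a computer that decodes it, and commands forwarded to the robot) can be sketched as follows. The decoding rule and the JSON message format are placeholders invented for this example, not the University at Buffalo method; a real system would use a trained classifier and the robot's own control interface.

```python
import json

N_CHANNELS = 14  # number of EEG sensors on the headset described in the article


def decode_command(frames, dead_zone=0.5):
    """Placeholder decoder (not a physiological model): compare the mean absolute
    amplitude of channels 0-6 with channels 7-13 and map the difference to a command."""
    if not frames:
        raise ValueError("no frames received")
    for frame in frames:
        if len(frame) != N_CHANNELS:
            raise ValueError(f"expected {N_CHANNELS} channels, got {len(frame)}")
    half = N_CHANNELS // 2
    group_a = [abs(v) for frame in frames for v in frame[:half]]
    group_b = [abs(v) for frame in frames for v in frame[half:]]
    diff = sum(group_a) / len(group_a) - sum(group_b) / len(group_b)
    if diff > dead_zone:
        return "left"
    if diff < -dead_zone:
        return "right"
    return "stop"  # inside the dead zone: keep the robot still


def encode_for_robot(command, seq):
    """Wrap a command in a JSON message with a sequence number (hypothetical wire format)."""
    return json.dumps({"seq": seq, "cmd": command}).encode("utf-8")


if __name__ == "__main__":
    # Three hypothetical 14-channel frames received wirelessly from the headset
    frames = [[2.0] * 7 + [0.5] * 7 for _ in range(3)]
    print(encode_for_robot(decode_command(frames), seq=1))
```

The dead zone keeps small, noisy differences from twitching the robot, and the sequence number lets the robot side discard stale or duplicated commands.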

Factory workers could use such robots to perform hands-free assembly or to carry out tasks like drilling or welding, reducing the boredom of repetitive work and improving worker safety and productivity.

How exciting would it be to watch an action imagined by one brain get translated into an actual action by another? Imagine sitting at a desk in one building, playing a game on your computer, while another person sits at a desk in another building, facing a computer that displays the same game. You are wearing a cap with wires coming out of it. Without moving a muscle or using a communication device, you have to tell your colleague to fire a gun on the screen at just the right moment. You have only the power of your mind, so, at the right moment, you imagine firing the gun. Your thought sends a signal over the internet to your associate, who is wearing noise-cancelling earphones and involuntarily moves his right index finger to press the space bar, firing the gun.
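The scenario above is essentially a detect-and-trigger protocol. The sketch below assumes the sender's imagined movement shows up as a drop in mu-band power below a fraction of a resting baseline (event-related desynchronization), a common motor-imagery marker used here as an assumption. A local socket pair stands in for the internet link, and the receiver-side stimulation that actually moves the finger is not modeled. The message format, threshold and power values are hypothetical.

```python
import socket


def detect_imagined_movement(mu_power, baseline, drop_ratio=0.7):
    """Assumed detector: flag motor imagery when mu-band power falls below
    drop_ratio * resting baseline (event-related desynchronization)."""
    return mu_power < drop_ratio * baseline


def run_sender(conn, mu_powers, baseline):
    """Scan successive mu-band power estimates; send one trigger on the first detection."""
    for t, power in enumerate(mu_powers):
        if detect_imagined_movement(power, baseline):
            conn.sendall(f"FIRE {t}\n".encode("ascii"))  # hypothetical message format
            return t
    return None


def run_receiver(conn):
    """Read one newline-terminated trigger. In the scenario above, this is the point
    where the receiver's finger would be made to press the space bar (not modeled)."""
    with conn.makefile("r", encoding="ascii") as stream:
        return stream.readline().strip()


if __name__ == "__main__":
    # A connected local socket pair stands in for the internet link between buildings.
    sender_sock, receiver_sock = socket.socketpair()
    with sender_sock, receiver_sock:
        fired_at = run_sender(sender_sock, [10.2, 9.8, 6.5, 9.9], baseline=10.0)
        print(fired_at, run_receiver(receiver_sock))
```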

A number of groups worldwide are trying to create thought-controlled robots for various applications. Honda demonstrated how its robot ASIMO could lift an arm or a leg in response to signals sent wirelessly from a system operated by a user wearing an EEG cap, while scientists at the University of Zaragoza in Spain are working on robotic wheelchairs that can be steered by thought.

Puzzlebox Orbit is a flying orb whose propellers are controlled by brain activity. It is operated with a NeuroSky EEG headset together with either a mobile device or the dedicated Puzzlebox Pyramid remote. Users fly the Orbit by concentrating or by maintaining a state of mental relaxation. Because it can be controlled from an Android or iOS phone, no full computer has to be connected to the flying device.
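As a rough sketch of concentration- or relaxation-based flight control, assume the headset reports attention and meditation levels on a 0 to 100 scale, as NeuroSky's eSense meters do. The threshold and the linear mapping to throttle are hypothetical choices, not Puzzlebox's actual control law.

```python
def throttle(level, threshold=60, max_level=100):
    """Map a 0-100 concentration or relaxation reading to a throttle between 0.0 and 1.0.

    Below the (hypothetical) threshold the propellers stay off; above it, thrust
    rises linearly to full power at max_level."""
    if not 0 <= level <= max_level:
        raise ValueError(f"level must be between 0 and {max_level}")
    if level < threshold:
        return 0.0
    return (level - threshold) / (max_level - threshold)


def control_step(attention, meditation, mode="attention", threshold=60):
    """Pick the reading for the chosen flying mode and convert it to a throttle."""
    if mode == "attention":
        level = attention
    elif mode == "meditation":
        level = meditation
    else:
        raise ValueError("mode must be 'attention' or 'meditation'")
    return throttle(level, threshold)


if __name__ == "__main__":
    for att, med in [(45, 30), (80, 20), (100, 10)]:
        print(att, med, control_step(att, med))
```

The threshold gives the user a clear "off" state, so the orb does not lift on background fluctuations in the reading.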

Mitra, a robot prototype, is a two-foot-tall humanoid that can walk, look for familiar objects, and pick up or drop off objects. A BCI is being developed that can train Mitra to walk to different locations within a room. Wearing an EEG cap, a person can either teach the robot a new skill or execute a command chosen from a menu. In "teaching" mode, machine learning algorithms map the robot's sensor readings to appropriate commands. If the robot successfully learns the new behavior, the user can ask the system to store it as a new high-level command, which will appear in the list of available choices the next time.
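Mitra's learning algorithm is not described beyond "machine learning". The sketch below uses a 1-nearest-neighbour learner as a stand-in: in teaching mode it records (sensor reading, command) pairs, later reuses the command taught for the most similar reading, and can save what it learned as a named high-level command on the menu. The sensor readings, built-in menu entries and skill names are hypothetical.

```python
from math import dist


def nearest_command(examples, reading):
    """1-nearest-neighbour: return the command taught for the closest stored reading."""
    if not examples:
        raise ValueError("no examples available")
    _, command = min(examples, key=lambda ex: dist(ex[0], reading))
    return command


class TeachableRobot:
    def __init__(self):
        # Hypothetical built-in menu entries
        self.menu = ["walk forward", "turn left", "turn right", "stop"]
        self.examples = []  # (sensor reading, command) pairs from the current teaching session
        self.skills = {}    # skill name -> stored examples

    def teach(self, reading, command):
        """Teaching mode: record which command fits this sensor reading."""
        if command not in self.menu:
            raise ValueError(f"unknown command: {command}")
        self.examples.append((tuple(reading), command))

    def act(self, reading):
        """Choose a command for a new reading from the current teaching session."""
        return nearest_command(self.examples, reading)

    def store_skill(self, name):
        """Save the session as a named high-level command and list it on the menu."""
        self.skills[name] = list(self.examples)
        if name not in self.menu:
            self.menu.append(name)
        self.examples = []

    def run_skill(self, name, reading):
        """Replay a stored skill: pick the low-level command for the current reading."""
        return nearest_command(self.skills[name], reading)


if __name__ == "__main__":
    robot = TeachableRobot()
    # Hypothetical 2-D sensor readings paired with the command the user chose
    robot.teach((2.0, 0.5), "turn left")
    robot.teach((0.5, 2.0), "walk forward")
    robot.teach((0.1, 2.5), "stop")
    robot.store_skill("go to table")
    print(robot.menu[-1], robot.run_skill("go to table", (0.6, 1.9)))
```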

We will see advancements that give disabled people mobility, using the power of their minds to control wheelchairs and other devices that could restore what they have lost. Musicians might be able to create music directly through their thoughts. A brainwave-reading headset could monitor brain activity and reroute cell phone calls to voicemail when it perceives that the user's brain is busy with other tasks.

In some cases, human-machine interfaces are becoming part of the human body. One new prosthetic even provides a sense of "touch" like that of a natural arm, because it interfaces with the wearer's nervous system by splicing into residual nerves in the partial limb. The prosthetic sends sensory signals to the wearer's brain that produce a lifelike "feel," allowing users to operate the limb by touch rather than by sight alone.

Where will mind control take us? Will we all have implants in our brains that allow us to connect to computers, robots, machines and other people just by thinking? Will we have the power of Gabriel Vaughn in the TV show Intelligence, a high-tech intelligence operative enhanced with a super-computer microchip in his brain? With this implant, Gabriel is the first human ever to be connected directly to the global information grid. He can get into any of its data centers and access key intel files in the fight to protect the United States from its enemies. What will we be able to do?


Len has contributed articles to several publications. He also writes opinion editorials for a local newspaper. He is now retired.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow.
