Focuses on Unmanned Automotive Technologies at RoboBusiness

Optical Time-of-Flight Sensing Technology

Thomas R. Cutler for LeddarTech

LeddarTech, maker of the patented, leading-edge Leddar sensing technology, will be exhibiting with AutonomouStuff at the upcoming RoboBusiness show on September 23-24 at the San Jose Convention Center in San Jose, CA. LeddarTech and AutonomouStuff will share Booth #301. The exhibit will focus on sensors for automated vehicles and robots, with applications including collision avoidance, unmanned guidance, assisted driving, and machine safety.

AutonomouStuff is the world’s leader in supplying specialized product solutions and services related to autonomous driving, robotics, terrain mapping, collision avoidance, object tracking, intersection safety, and a variety of industrial applications.

LeddarTech owns and provides the patented Leddar sensing technology, which enables advanced detection and ranging systems. Leddar performs time-of-flight measurements using light pulses processed through innovative algorithms, detecting a wide range of objects under various environmental conditions.

Leddar sensing technology is deployed globally in all types of devices and applications, from specialized industrial solutions to high-volume Internet of Things (IoT) sensing applications. Having grown at a 200% CAGR over the last few years, the sensor market is expected to exceed a trillion dollars in 2020.

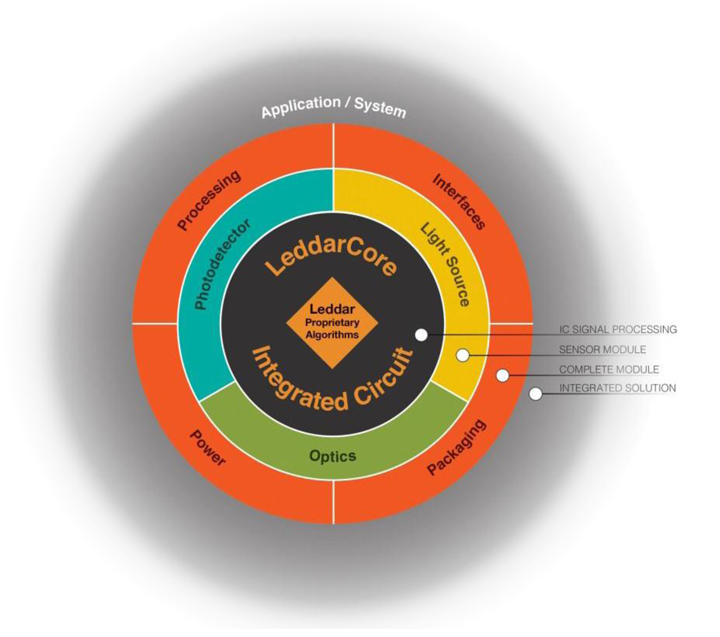

The highly adaptable Leddar technology serves multiple industry sectors in different formats (from sensor ICs to off-the-shelf sensor modules), providing brand owners and OEMs with a best-in-class sensing solution optimized for their application and ensuring quick and simple integration.

LeddarTech’s technology is accessible to anyone interested in exploring the new frontiers of innovation through sensors, from maker communities experimenting with sensor modules to specialized industrial leaders and large consumer goods manufacturers working on new products or applications.

Pierre Olivier, Vice President, Engineering and Manufacturing, LeddarTech Inc., explained that remote sensing consists of acquiring information about a specific object in the vicinity of a sensor without making physical contact with the object. The technique has countless applications, including automotive driver assistance, robot guidance, traffic management, and level sensing.

Remote sensing applications fall into three categories:

- Presence or proximity detection, where the only information required is whether an object is present in a general area (e.g., for security applications). This is the simplest form of remote sensing.
- Speed measurement, where the exact position of an object does not need to be known but its speed must be measured accurately (e.g., for law enforcement applications).
- Detection and ranging, where the position of an object relative to the sensor must be determined precisely and accurately.

At RoboBusiness, the focus will be on technologies capable of providing detection and ranging functionality, since it is the most complex of the three applications. Because presence and speed can both be derived from position information, technologies capable of detection and ranging can be applied to all remote sensing applications.
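As a minimal sketch of why ranging covers the other two functions, the Python below derives presence and line-of-sight speed from timestamped range readings. The `RangeReading` type, the function names, and the fixed `max_range` threshold are hypothetical illustrations, not part of any LeddarTech interface. A single-beam sensor only observes the speed component along its line of sight, and a real system would also filter noisy readings.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """Hypothetical ranging sample: time in seconds, distance in meters."""
    t: float
    distance: float


def is_present(reading: RangeReading, max_range: float) -> bool:
    """Presence: an object was detected within the monitored range."""
    return reading.distance <= max_range


def radial_speed(earlier: RangeReading, later: RangeReading) -> float:
    """Speed along the sensor's line of sight (m/s) from two successive ranges.

    Negative values mean the object is moving toward the sensor.
    """
    dt = later.t - earlier.t
    if dt <= 0:
        raise ValueError("readings must be in increasing time order")
    return (later.distance - earlier.distance) / dt


if __name__ == "__main__":
    a = RangeReading(t=0.0, distance=20.0)
    b = RangeReading(t=0.5, distance=17.5)
    print(is_present(b, max_range=30.0))  # True
    print(radial_speed(a, b))             # -5.0 (approaching at 5 m/s)
```

Two successive positions are enough for a speed estimate, and one position compared against a threshold is enough for presence. The reverse is not true: a speed or presence reading alone cannot recover position.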

Although distance information can be obtained with passive technologies, such as stereo triangulation of camera images, these passive technologies are usually quite limited in capability. Stereo triangulation, for example, requires well-defined edges for its matching algorithms to work. The most commonly used technologies for measuring the position of an object send energy toward the object, collect the echo signal, and analyze it to determine the position of one or several objects located in the sensor’s field of view. Because energy is intentionally emitted toward the object being measured, LeddarTech refers to these technologies as “active.”
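For comparison with active methods, depth from a rectified stereo camera pair is commonly computed with the pinhole relation Z = f·B/d. Here f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity, the horizontal pixel shift of a matched feature. The sketch below uses camera parameters chosen purely for illustration. It shows why reliable edge matching matters: at about 12 m, a one-pixel matching error moves the estimate by more than a meter.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair under the pinhole model.

    focal_px: focal length in pixels; baseline_m: camera separation in meters;
    disparity_px: horizontal shift of the matched feature between the images.
    """
    if disparity_px <= 0:
        # No valid match, e.g. a textureless surface with no edges to match.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Illustrative camera parameters, not taken from any particular product.
    f_px, b_m = 800.0, 0.12
    z_true = stereo_depth(f_px, b_m, 8.0)  # correct match
    z_off = stereo_depth(f_px, b_m, 7.0)   # one-pixel matching error
    print(f"{z_true:.2f} m vs {z_off:.2f} m")
```

Because depth is inversely proportional to disparity, the error from a fixed pixel mismatch grows rapidly with distance. An active time-of-flight measurement does not depend on finding matching features at all.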

Some technologies rely on the geometric location of the return echo to infer position information. For instance, structured lighting involves projecting an array of dots towards the object to be measured, and analyzing the geometric dispersion of the dots on the object using a camera and image analysis.

Other technologies rely on the time characteristic of the return echo to determine the position of the object to be measured. These are generally known as “time-of-flight measurement” technologies.

Although implementations differ, time-of-flight measurement can be accomplished with radio waves (radar), sound or ultrasonic waves (sonar), or light waves (lidar). Optical time-of-flight measurement computes the distance to a target from the round-trip travel time of light between the sensor and the object. Since the speed of light in air changes very little over normal temperature and pressure extremes, and is many orders of magnitude greater than the speed of the objects being measured, optical time-of-flight measurement is one of the most reliable ways to accurately measure the distance to objects without contact.
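The round-trip relation behind optical time-of-flight is d = v·t/2. Here v is the speed of light in air (the vacuum value c divided by the refractive index n of air), and t is the measured round-trip time. The sketch below assumes a nominal n of 1.000293. For contrast with sonar, it also uses a common linear approximation for the speed of sound in air. Both constants, and the range of n compared, are approximations chosen for illustration. Under these assumptions, a plausible swing in n changes the computed distance by only about 0.005%, while the speed of sound changes by roughly 5.5% between 0 °C and 30 °C.

```python
SPEED_OF_LIGHT_VACUUM = 299_792_458.0  # m/s, exact by SI definition
N_AIR_NOMINAL = 1.000293  # assumed refractive index of air near sea level


def optical_tof_distance(round_trip_s: float, n_air: float = N_AIR_NOMINAL) -> float:
    """Distance (m) from a round-trip time: the light covers the path twice."""
    speed_in_air = SPEED_OF_LIGHT_VACUUM / n_air
    return speed_in_air * round_trip_s / 2.0


def speed_of_sound_air(temp_c: float) -> float:
    """Common linear approximation for the speed of sound in dry air (m/s)."""
    return 331.3 + 0.606 * temp_c


if __name__ == "__main__":
    t = 100e-9  # a 100 ns round trip corresponds to roughly 15 m
    print(f"distance: {optical_tof_distance(t):.3f} m")

    # Relative change in computed distance for an assumed swing in n_air.
    d_low_n = optical_tof_distance(t, 1.000250)
    d_high_n = optical_tof_distance(t, 1.000300)
    print(f"light: {(d_low_n - d_high_n) / d_low_n:.4%} change")

    # Relative change in the speed of sound between 0 °C and 30 °C.
    v0, v30 = speed_of_sound_air(0.0), speed_of_sound_air(30.0)
    print(f"sound: {(v30 - v0) / v0:.2%} change")
```

The same arithmetic shows the engineering challenge: at these speeds, each centimeter of range corresponds to only about 67 picoseconds of round-trip time. This is why the signal processing applied to the returned light pulses matters so much.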

About Thomas R. Cutler

Thomas R. Cutler is the President & CEO of Fort Lauderdale, Florida-based TR Cutler, Inc. (www.trcutlerinc.com). Cutler is the founder of the Manufacturing Media Consortium, which includes more than 5000 journalists, editors, and economists writing about trends in manufacturing, industry, material handling, and process improvement. Cutler authors more than 500 feature articles annually about the manufacturing sector. Cutler is the most published freelance industrial journalist worldwide. He can be contacted at trcutler@trcutlerinc.com and followed on Twitter @ThomasRCutler.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow.
