Embedded vision solution with USB 3.0 board-level camera.

Contributed by | IDS Imaging Development Systems

Besides 3D and robot vision, embedded vision is one of the latest hot topics in machine vision. This is because without real-time machine vision, there would be no such thing as autonomous transport systems and robots in the Industry 4.0 environment, self-driving cars, or unmanned drones. Classic machine vision systems, however, do not enter the equation due to space or cost limitations, while the functionality of so-called smart cameras is usually very limited. Only by combining a miniaturized camera, a compact processor board, and flexibly programmable software can tailor-made machine vision applications be developed and embedded directly in machines or vehicles.

Application

One such custom application is a multicopter, which has been built at the Braunschweig University of Technology for mapping disaster zones in real time. The maps are created by an embedded vision system mounted on board, which consists of an ARM-based single-board computer and an IDS USB 3.0 board-level camera.

How can a drone help in a disaster situation? Drones can be put to a variety of uses, such as taking water samples, dropping life belts, or providing geodata and images from areas that are difficult or even impossible for people to access. For the latter task, the AKAMAV team has constructed a specially equipped multicopter. AKAMAV is a working group of students and employees from the Braunschweig University of Technology and is supported by the Institute of Flight Guidance (IFF) at TU Braunschweig.

Multicopter with IDS USB 3.0 industrial camera and ARMv7 single-board computer

This micro air vehicle (MAV) flies over disaster zones – these may be earthquake zones or flooded cities, or even burning factories – and delivers maps in real time, which the rescue teams can use immediately to help them with their mission planning. The multicopter operates autonomously. Its area of operation is defined using an existing georeferenced satellite image. A waypoint list is generated from this automatically depending on the size of the area and the required ground resolution. The multicopter then flies along these waypoints using a Global Navigation Satellite System (GNSS) such as GPS. It also takes off and lands automatically.
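The article does not describe how AKAMAV generates its waypoint list. As a rough illustration of the idea, the following C++ sketch lays a back-and-forth survey path over a rectangular area. The rectangle, the local metric coordinate frame, the `side_overlap` parameter, and all names are assumptions made for this example; the width of an image strip on the ground would follow from the required ground resolution multiplied by the image size in pixels.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical waypoint in a local metric frame (meters east/north of one
// corner of the survey area). Converting to latitude/longitude is omitted.
struct Waypoint {
    double east_m;
    double north_m;
};

// Builds a back-and-forth path over a width_m x height_m rectangle.
// footprint_across_m is the ground width of one image strip, and
// side_overlap (0 <= value < 1) is the fraction shared by neighboring strips.
std::vector<Waypoint> makeSurveyWaypoints(double width_m, double height_m,
                                          double footprint_across_m,
                                          double side_overlap) {
    assert(width_m > 0.0 && height_m > 0.0 && footprint_across_m > 0.0);
    assert(side_overlap >= 0.0 && side_overlap < 1.0);

    // Distance between neighboring flight lines.
    const double spacing_m = footprint_across_m * (1.0 - side_overlap);
    // Number of flight lines needed to cover the full height of the area.
    const int lines = static_cast<int>(std::ceil(height_m / spacing_m));

    std::vector<Waypoint> path;
    for (int i = 0; i < lines; ++i) {
        // Center the strip on its flight line; keep the last line inside the area.
        const double north = std::min(height_m, (i + 0.5) * spacing_m);
        // Alternate the flight direction so the path is continuous.
        const bool eastbound = (i % 2 == 0);
        path.push_back({eastbound ? 0.0 : width_m, north});
        path.push_back({eastbound ? width_m : 0.0, north});
    }
    return path;
}
```

Called as `makeSurveyWaypoints(100.0, 100.0, 50.0, 0.2)`, the sketch produces three flight lines, i.e. six waypoints, for a one-hectare square. A coarser required ground resolution means a wider strip and therefore fewer lines.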

The real-time requirements rule out the remote sensing methods currently available on the market, such as photogrammetry. These methods do not deliver results until all images have been captured and combined by algorithms that usually require considerable processing power. Although the maps created in this way are extremely precise, precision is not a top priority for gaining an initial overview in a disaster situation, and waiting for it would only delay the rescue operations unduly. In contrast, the solution designed by AKAMAV is based on the principle of image mosaicing, or stitching, which is a proven method of piecing together a large overall image from many individual images extremely quickly. To put this principle into practice on a multicopter, however, the images provided by the camera must be processed quickly by a computer on board.

The "classic" vision system consists of an industrial camera that is connected to a desktop PC or box PC via USB, GigE, or FireWire; the actual image processing is done on the computer by appropriate machine vision software and other components are managed within the application, if applicable. A configuration of this type needs space, is comparatively expensive, and provides a great deal of functionality that is ultimately unnecessary. Embedded vision systems that are based on single-board computers and used in conjunction with open-source operating systems can be extremely compact, yet can be programmed flexibly, and are usually inexpensive to implement. Board-level cameras with a USB interface can be teamed up perfectly with ARM-based single-board computers. These provide sufficient processing power, consume little power, are available in various form factors, most of which are extremely small, and can be purchased for less than 100 euros. Take, for instance, the famous Raspberry Pi.

AKAMAV relies on the tiny but extremely powerful ODROID-XU4 board, which measures just 83 x 60 mm, has an 8-core ARM CPU, and runs Linux. Despite its size, the single-board computer has all the essential interfaces (including, for instance, GigE, USB 2.0, and USB 3.0) and is connected to the aircraft system's autopilot via a USB interface.

The computer not only receives status information via this data link, but also receives information on the current position in latitude and longitude, the barometric altitude, and the height above the reference ellipsoid.

Camera

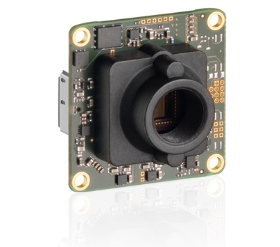

Images are captured by a board-level camera from the IDS USB 3 uEye LE series. The board-level version of the industrial camera with a USB 3.0 connector measures just 36 x 36 mm, yet offers all essential features. It is available with the latest generation of ON Semiconductor and e2v CMOS sensors and with resolutions of up to 18 megapixels. An 8-pin connector with a 5 V power supply, trigger and flash, 2 GPIOs, and an I2C bus for controlling peripherals ensures almost unlimited connectivity and high flexibility.

USB 3.0 camera 3251LE with 2 MPixel CMOS sensor and S-mount lens holder

The AKAMAV team mounted the UI-3251LE-C-HQ model with a 2 megapixel CMOS sensor and an S-mount lens holder on the multicopter, because, as Alexander Kern, student member of the AKAMAV team, points out, the camera resolution is of secondary importance in this case. "Processing is carried out using the original image data, which may even be reduced in size to ensure the required performance of the overall algorithm. As soon as an image is captured by the camera and forwarded to the single-board computer, the system searches the image for landmarks, referred to as features, and extracts these. The system also scours the next image for features and matches them with those from the previous image. From the corresponding pairs of points, the system can then determine how the images were taken relative to one another and can quickly add each new image to a complete map accordingly."
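The last step in this description, deriving the relative position of two images from corresponding point pairs, can be illustrated with a deliberately simplified C++ sketch. It assumes that the features have already been detected and matched and that consecutive images differ only by a shift, with no rotation or scale change. It takes the median displacement so that a few wrong matches do not distort the result. This is not the AKAMAV implementation, which uses OpenCV; all type and function names here are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Point2 {
    double x;
    double y;
};

// One landmark seen in two consecutive images (pixel coordinates).
struct Match {
    Point2 prev;  // position in the previous image
    Point2 curr;  // position in the current image
};

// Median of a non-empty list (upper median for an even number of values).
static double median(std::vector<double> values) {
    assert(!values.empty());
    const std::size_t mid = values.size() / 2;
    std::nth_element(values.begin(), values.begin() + mid, values.end());
    return values[mid];
}

// Estimates the shift that maps current-image coordinates into the previous
// image's coordinates. The median tolerates a minority of outlier matches.
Point2 estimateShift(const std::vector<Match>& matches) {
    std::vector<double> dx, dy;
    for (const Match& m : matches) {
        dx.push_back(m.prev.x - m.curr.x);
        dy.push_back(m.prev.y - m.curr.y);
    }
    return {median(dx), median(dy)};
}

// Map position of the new image's top-left corner, given where the previous
// image was placed on the map.
Point2 placeOnMap(Point2 prevOrigin, Point2 shift) {
    return {prevOrigin.x + shift.x, prevOrigin.y + shift.y};
}
```

A full pipeline would typically estimate a more general transform, such as a homography, with a robust method instead of a pure shift, but the principle of chaining each new image to the previous one is the same.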

For an area of one hectare, the multicopter requires approximately four to five minutes if it takes off and lands directly at the edge of the area to be mapped. At a flight altitude of around 40 m and a scaled image resolution of 1200 x 800 pixels, the ground resolution is approximately 7 cm/pixel on average. The embedded vision system is designed for a maximum multicopter airspeed of 5 m/s. Accordingly, a comparatively low image capture rate of 1-2 fps is sufficient and there is also no need to buffer the image data.
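The figures above can be checked with a little arithmetic. The following C++ sketch computes the ground area covered by one image and the overlap between consecutive images. The function names are made up for this example, and it assumes that the multicopter flies along the shorter image edge, which the article does not state.

```cpp
#include <cassert>

// Ground area covered by a single image, in meters.
struct Footprint {
    double width_m;
    double height_m;
};

// Footprint from image size in pixels and ground resolution in meters/pixel.
Footprint groundFootprint(int width_px, int height_px, double metersPerPixel) {
    assert(width_px > 0 && height_px > 0 && metersPerPixel > 0.0);
    return {width_px * metersPerPixel, height_px * metersPerPixel};
}

// Fraction of the previous image that is still visible in the next one when
// flying straight along an image edge of length footprint_along_m.
double forwardOverlap(double footprint_along_m, double speed_m_s, double fps) {
    assert(footprint_along_m > 0.0 && speed_m_s >= 0.0 && fps > 0.0);
    const double advance_m = speed_m_s / fps;  // distance flown between frames
    const double overlap = 1.0 - advance_m / footprint_along_m;
    return overlap < 0.0 ? 0.0 : overlap;
}
```

With 1200 x 800 pixels at 0.07 m/pixel, one image covers roughly 84 m x 56 m. At 5 m/s and 1 fps the multicopter advances 5 m between frames, so under the stated assumption consecutive images still overlap by about 91 percent, which is consistent with the low capture rate being sufficient for feature matching.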

If there is a radio connection between the aircraft and the control station, the stitching process can be tracked live from the ground. Once the mapping process is finished, the complete map can be accessed either remotely if the multicopter is within radio range, or it can be copied to an external data storage medium and distributed once the multicopter has landed.

Software

The machine vision technology was implemented by the AKAMAV team using the open source computer vision library OpenCV and C++. "As the issue here is the real-time requirement, the software must be as powerful as possible, which is why only a high-level language is worth considering. OpenCV has become the standard for machine vision over the past few years in the research field and includes all kinds of impressive features for image analysis or machine vision", argues Mario Gäbel from the AKAMAV student working group.

The camera is integrated using the uEye API. The API plays a key role in all vision applications. This is because it decides not only how easy it is to use the camera's functionality, but also how well the camera's potential can be exploited. IDS offers one major advantage in this respect with its own "standard" from which developers of embedded vision applications in particular also reap enormous benefits: After all, it does not matter which of the manufacturer's cameras is used, or which interface technology (USB 2.0, USB 3.0, or GigE) is required, or whether a desktop or embedded platform is used – the uEye API is always the same.

This not only facilitates the interchangeability of the camera or platform, but also allows developers to work on projects on a desktop PC and later use these projects 1:1 on the embedded computer without having to make time-consuming adjustments to the code. The process both for integrating the camera and adapting to third-party machine vision software, such as Halcon, is exactly the same on the embedded platform as in the desktop environment.

Special tools, such as the uEye Cockpit, which is also part of IDS's own software development kit, also reduce the amount of programming effort. Based on the motto "Configuring instead of programming", the camera can be preconfigured on the desktop PC in just a few clicks. The configuration can be saved and easily loaded into the embedded vision application later. In the embedded sector, in particular, programming by cross-compiling is usually extremely time-consuming, and often no options are available for configuring the connected camera on the device itself due to the lack of display and keyboard ports. Especially in a situation like this, the option to preconfigure the camera settings in the uEye Cockpit is worth its weight in gold.

Outlook

In the near future, micro air vehicles such as the AKAMAV multicopter will play an increasingly important role in many sectors. Whether in the field of measurement data acquisition, disaster relief, traffic surveillance, or for monitoring extensive infrastructure networks such as gas pipelines, embedded vision solutions can reach places that are difficult or even impossible for people to access. More information: www.akamav.de

USB 3 uEye LE - The perfect component for embedded systems

IDS industrial camera USB 3 uEye LE Boardlevel

- Interface: USB 3.0

- Name: UI-3251LE

- Sensor type: CMOS

- Manufacturer: e2v

- Frame rate: 60 fps

- Resolution: 1600 x 1200 px

- Shutter: Global Start Shutter, Rolling Shutter, Global Shutter

- Optical class: 1/1.8”

- Dimensions: 36 x 36 x 20 mm

- Mass: 12 g

- Connector: USB 3.0 Micro-B

- Applications: Apparatus engineering, Medical technology, Embedded systems

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow

IDS Imaging Development Systems Inc.

World-class image processing and industrial cameras "Made in Germany". Machine vision systems from IDS are powerful and easy to use. IDS is a leading provider of area scan cameras with USB and GigE interfaces, 3D industrial cameras and industrial cameras with artificial intelligence. Industrial monitoring cameras with streaming and event recording complete the portfolio. One of IDS's key strengths is customized solutions. An experienced project team of hardware and software developers makes almost anything technically possible to meet individual specifications - from custom design and PCB electronics to specific connector configurations. Whether in an industrial or non-industrial setting: IDS cameras and sensors assist companies worldwide in optimizing processes, ensuring quality, driving research, conserving raw materials, and serving people. They provide reliability, efficiency and flexibility for your application.
