
Time of Flight Forges Ahead: Design Tips to Boost 3D Performance and Cut Integration Time & Cost

White Paper from LUCID Vision Labs

Have you wondered how to boost the performance of your 3D Time of Flight (ToF) imaging application while cutting integration time and cost? Our newest hands-on guidebook describes how to get the most out of ToF by accounting for factors such as the application environment and the properties of the target objects in the scene. It covers the following topics:

• Outdoors vs Controlled Environments

• Target & Camera Motion

• Specular, Diffuse & Transparent Targets

• Scene Complexity Simplified

• Working Distance Considerations

• Put It All Together

Sample Chapter 3: "Specular and Diffuse Targets"

Targets with diffuse surfaces and high reflectivity work best for ToF (Image Set 4, Examples a, b, d). These targets return enough light to the ToF sensor without producing specular reflections. Some objects, however, have less-than-ideal properties but are still discernible within the scene. In these situations, target detail can be improved by adjusting exposure time and gain, accumulating images, and filtering.

EXPOSURE TIME AND GAIN

Choosing the best exposure time maximizes usable depth data for both high- and low-reflectivity targets. The Helios camera offers two discrete exposure time settings, 1,000µs and 250µs, as well as high and normal gain settings. The 1,000µs setting is both the default and the maximum exposure time. Use the longer exposure time and high gain for scenes farther from the camera or for objects with low reflectivity. Use the shorter exposure time and normal gain for scenes closer to the camera or for objects that appear oversaturated.

| EXPOSURE TIME | GAIN | BEST FOR… |
| --- | --- | --- |
| 1,000µs | High | Dark objects, farther distances |
| 250µs | Normal | Highly reflective objects, closer distances |

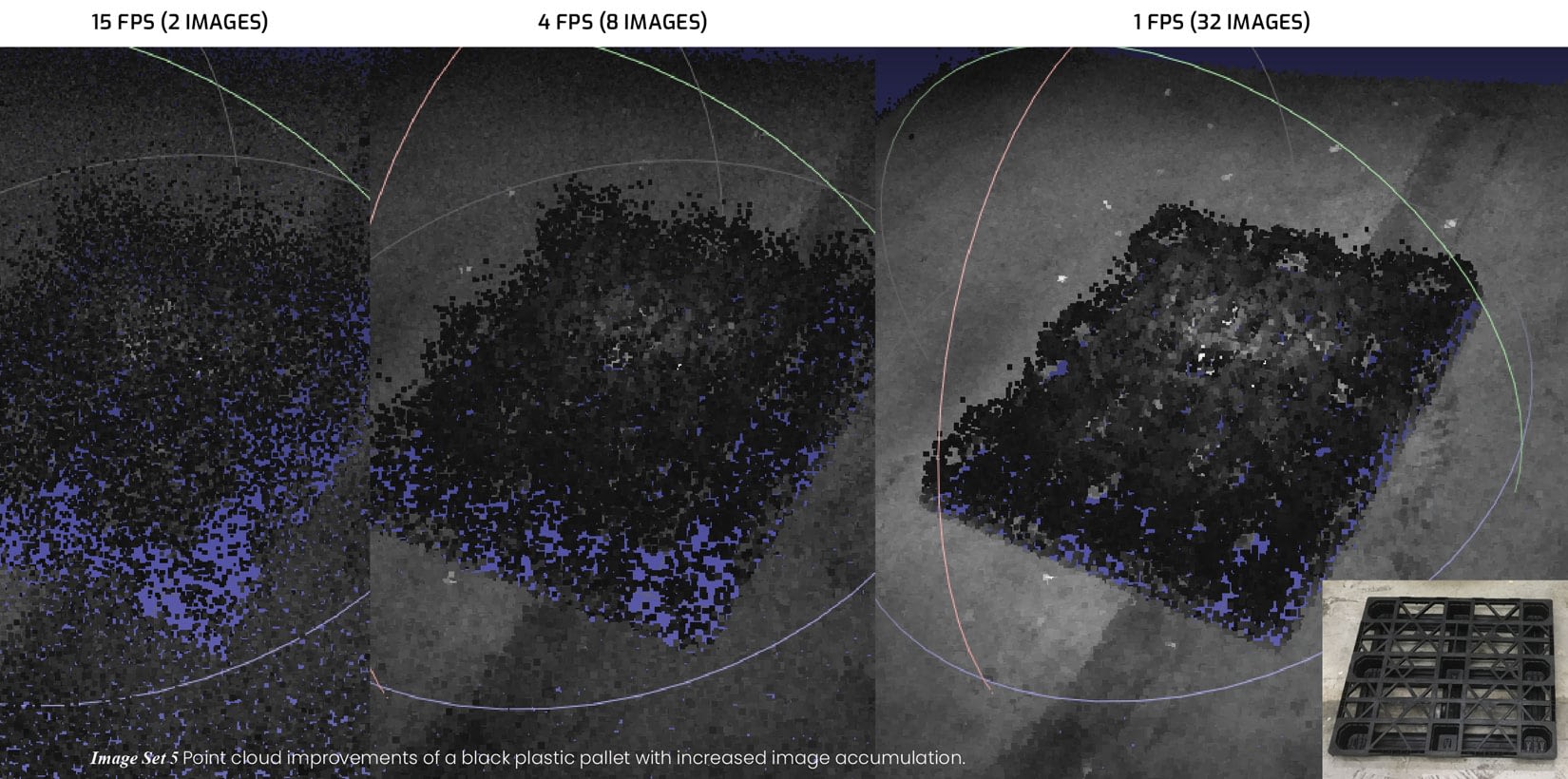
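
The choice between the two rows of the table can be sketched as a simple saturation check. This is only an illustrative heuristic, not the Helios API: the `ExposureSetting` type, the `choose_setting` function, the 12-bit saturation level, and the 5% cutoff are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExposureSetting:
    exposure_us: int
    gain: str


# The two combinations listed in the table above.
LONG_HIGH = ExposureSetting(exposure_us=1000, gain="High")
SHORT_NORMAL = ExposureSetting(exposure_us=250, gain="Normal")


def choose_setting(intensity, saturation_level=4095, max_saturated_fraction=0.05):
    """Pick one of the two settings from an intensity image (list of rows).

    saturation_level and max_saturated_fraction are hypothetical values
    chosen for illustration; tune them for the actual sensor and scene.
    """
    pixels = [p for row in intensity for p in row]
    if not pixels:
        raise ValueError("intensity image is empty")
    saturated = sum(1 for p in pixels if p >= saturation_level)
    # Too many saturated pixels suggests a close or highly reflective target.
    if saturated / len(pixels) > max_saturated_fraction:
        return SHORT_NORMAL
    # Otherwise keep the long exposure, which favors dark or distant targets.
    return LONG_HIGH
```

In practice the decision would also depend on working distance, which this sketch ignores.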

IMAGE ACCUMULATION

The Helios processing pipeline can accumulate multiple frames for improved depth calculations, which helps with targets that produce noisy data. With image accumulation, depth data is averaged over a set number of frames, reducing noise in the result. The trade-off is speed: the more frames accumulated, the slower depth data is generated, because more images must be captured for each result.

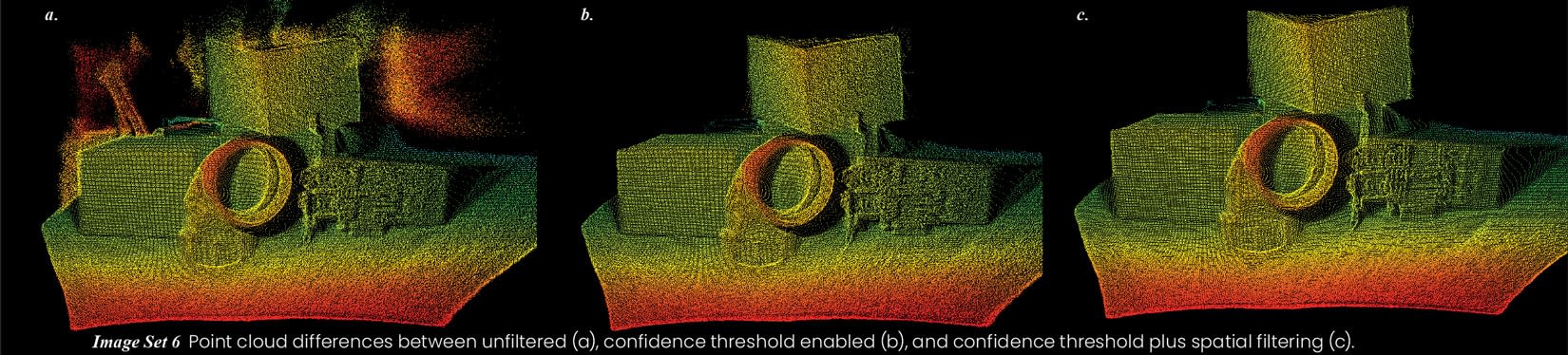
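
The effect of averaging can be sketched with simulated data. The example below assumes a flat target at 1,000 mm with Gaussian depth noise, and the function names are hypothetical. It illustrates per-pixel frame averaging in general, not the camera's internal pipeline.

```python
import random
import statistics


def accumulate(frames):
    """Average a list of equally sized depth frames pixel by pixel."""
    if not frames:
        raise ValueError("need at least one frame")
    count = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[y][x] for frame in frames) / count for x in range(width)]
        for y in range(height)
    ]


def noisy_frame(rng, true_depth_mm=1000.0, sigma_mm=10.0, height=8, width=8):
    """Simulate one depth frame of a flat target (assumed noise model)."""
    return [
        [rng.gauss(true_depth_mm, sigma_mm) for _ in range(width)]
        for _ in range(height)
    ]


def spread(frame):
    """Standard deviation of all depth values in a frame."""
    return statistics.pstdev([v for row in frame for v in row])


rng = random.Random(0)
single = noisy_frame(rng)
averaged = accumulate([noisy_frame(rng) for _ in range(16)])

print(f"single frame spread:     {spread(single):.1f} mm")
print(f"16-frame average spread: {spread(averaged):.1f} mm")
```

For independent noise, averaging N frames reduces the spread by roughly the square root of N. If each output requires N fresh captures, the output rate also drops by roughly a factor of N.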

CONFIDENCE THRESHOLD

Depth data confidence is based on the intensity of the returning signal at each point. If the returning signal is too weak, confidence in the resulting depth data is low. Thresholding removes depth data with low intensity (and therefore low confidence), improving scene clarity.
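
A minimal sketch of intensity-based thresholding follows, assuming the depth and intensity images are available as same-sized lists of rows. The function name, the `invalid` marker, and the threshold value are hypothetical.

```python
def apply_confidence_threshold(depth, intensity, min_intensity, invalid=None):
    """Return a copy of `depth` with low-intensity points replaced by `invalid`."""
    if len(depth) != len(intensity):
        raise ValueError("depth and intensity must have the same number of rows")
    return [
        [d if i >= min_intensity else invalid for d, i in zip(depth_row, intensity_row)]
        for depth_row, intensity_row in zip(depth, intensity)
    ]


depth_mm = [[500, 900], [1200, 3000]]
intensity = [[800, 20], [300, 5]]

# Points whose return signal is weaker than 50 are discarded.
filtered = apply_confidence_threshold(depth_mm, intensity, min_intensity=50)
print(filtered)
```

A higher threshold gives a cleaner scene but fewer valid points, so the value is usually tuned per scene.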

SPATIAL FILTERING

Spatial filtering reduces noise by averaging out depth differences between neighboring pixels, which smooths surfaces. The Helios camera's spatial filtering is also edge-preserving: it reduces noise on surfaces while keeping object edges sharp.
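
The idea of edge-preserving smoothing can be sketched with a thresholded 3x3 mean. Each pixel is averaged only with neighbors at a similar depth, so a large depth step is left intact. This is a simple stand-in, since the camera's actual filter is not described here. The function name and the 50 mm step limit are assumptions.

```python
def edge_aware_smooth(depth, max_step_mm=50.0):
    """Smooth a depth image (list of rows) without blurring depth edges.

    Each pixel becomes the mean of the 3x3 neighbors whose depth is within
    max_step_mm of it. Neighbors across an edge are excluded from the mean.
    """
    height, width = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for y in range(height):
        for x in range(width):
            center = depth[y][x]
            similar = [
                depth[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if abs(depth[ny][nx] - center) <= max_step_mm
            ]
            # `similar` always contains the center pixel, so it is never empty.
            out[y][x] = sum(similar) / len(similar)
    return out


# Two noisy surfaces, about 1,000 mm and 2,000 mm away, meeting at an edge.
scene = [
    [1000, 1004, 2000, 2002],
    [998, 1002, 1998, 2000],
    [1002, 996, 2004, 1996],
]
smoothed = edge_aware_smooth(scene)
for row in smoothed:
    print([round(v, 1) for v in row])
```

A plain 3x3 mean would blend the two surfaces near the edge into intermediate depths. The similarity test keeps each side's average on its own surface.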

