Autonomous Solutions, Inc. (ASI) improves algorithm to detect drop-offs and other large negative obstacles

ASI has improved an algorithm for autonomous vehicles to accurately detect negative obstacles in off-road environments.

Autonomous Solutions, Inc. (ASI) has improved an algorithm for autonomous vehicles to detect drop-offs and other large negative obstacles often found in the environments in which automated off-road vehicles operate.

"ASI has developed a method for mapping point cloud occlusions in real time, which provides additional accuracy and safety when integrated into an autonomous vehicle obstacle detection and avoidance system," said Taylor Bybee, Perception Tech Lead at ASI.

To navigate an environment safely, autonomous ground vehicles rely on sensor data representing the 3D space around the vehicle. Objects or terrain often block parts of that space from view, leaving gaps in the sensor field of view. These gaps, or occlusions, can indicate the presence of obstacles, negative obstacles, or rough terrain.

An occlusion is a blockage that prevents a sensor from gathering data at a location. In LiDAR data, for example, occlusions appear as shadows.
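To see why a drop-off casts a shadow, consider a simplified 2D side view. This is our own geometric illustration, not part of ASI's published description. A sensor mounted at height h above flat ground looks toward an edge at horizontal distance d, beyond which the ground drops by a depth D. The ray that just grazes the edge continues downward and meets the lower surface at d(h + D)/h, so a strip of length dD/h beyond the edge gets no returns. The sketch assumes flat ground, a vertical drop, and a sensor that can fire a ray at any downward angle. The function name `shadow_length` is hypothetical.

```python
def shadow_length(sensor_height: float, edge_distance: float, drop_depth: float) -> float:
    """Length of the unobserved strip beyond a vertical drop-off (2D side view).

    Illustrative geometry only, not ASI's algorithm. Assumes flat ground,
    a vertical wall at the edge, and unrestricted downward ray angles.
    """
    if sensor_height <= 0 or edge_distance <= 0 or drop_depth < 0:
        raise ValueError("height and distance must be positive, depth non-negative")
    # The ray from (0, h) grazing the edge at (d, 0) has slope -h/d, so it reaches
    # the lower surface y = -D at x = d * (h + D) / h. Everything between the base
    # of the wall (x = d) and that landing point is hidden from the sensor.
    landing_x = edge_distance * (sensor_height + drop_depth) / sensor_height
    return landing_x - edge_distance
```

For a sensor 2 m above the ground, an edge 10 m ahead, and a 3 m drop, `shadow_length(2.0, 10.0, 3.0)` gives 15.0 m. Because the shadow length is dD/h, a lower-mounted sensor or a more distant edge produces a longer gap in the data.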

Because sensors receive no data from occluded areas, the sensor data itself carries no explicit information about what lies there. That information must instead be inferred by an occlusion mapping algorithm, which gives the navigation system a more complete model of the environment.
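ASI has not described how its algorithm works internally, so the following is only a generic illustration of the basic inference, under our own assumptions. On a 2D grid, every cell that a sensor ray passes through or ends in counts as observed. Any cell the sensor should be able to see but that no ray reached is flagged as occluded; cells behind a return form its shadow. The helper names `bresenham` and `occluded_cells`, and the grid-of-integer-cells representation, are hypothetical.

```python
from typing import Iterable

# Illustrative sketch, not ASI's method: a grid cell is an (x, y) integer pair.
Cell = tuple[int, int]


def bresenham(start: Cell, end: Cell) -> list[Cell]:
    """All grid cells on the straight line from start to end, inclusive."""
    x0, y0 = start
    x1, y1 = end
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells = []
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy
    return cells


def occluded_cells(sensor: Cell, hits: Iterable[Cell], fov_cells: set[Cell]) -> set[Cell]:
    """Cells inside the expected field of view that no sensor ray reached."""
    observed: set[Cell] = set()
    for hit in hits:
        # Cells a ray passes through, plus the cell it returns from, count as observed.
        observed.update(bresenham(sensor, hit))
    return fov_cells - observed
```

With a sensor at (0, 0), a single return from (3, 0), and a field of view covering cells (0, 0) through (5, 0), the cells (4, 0) and (5, 0) come back as occluded: they lie in the shadow behind the return.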

"While sensor data itself doesn't tell us what's in the occluded areas, occlusions can represent negative obstacles like drop-offs or areas behind large obstacles," said Jeff Ferrin, CTO at ASI. "It's important to identify these areas for obstacle detection and avoidance to work properly."

The technology can be applied in settings with dump edges at mine sites, steep road edges, canals, ditches, or hills, as well as to stairs in indoor or urban environments.

The occlusion mapping algorithm has three main components.

The first is a sensor field of view (FOV) model that describes what obstacles the sensors are expected to detect. This component is designed for point cloud sensors such as 3D LiDAR, flash LiDAR, structured light sensors, and stereo cameras.
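As a rough idea of what a FOV model encodes, here is a minimal 2D sketch. It is our own simplification, not ASI's model. It assumes a sensor described only by a minimum range (standing in for the blind zone below the lowest beam), a maximum range, and a horizontal angular width centred on the sensor heading. A real 3D LiDAR or stereo camera model would also account for vertical angles and beam spacing. The class `FovModel` and its fields are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class FovModel:
    """Hypothetical 2D field-of-view model for a point cloud sensor.

    Illustration only, not ASI's model; a 3D sensor also needs vertical angles.
    """

    min_range: float       # metres; stands in for the blind zone below the lowest beam
    max_range: float       # metres; no returns are expected beyond this distance
    horizontal_fov: float  # radians; full angular width centred on the sensor heading

    def contains(self, sx: float, sy: float, heading: float, px: float, py: float) -> bool:
        """True if point (px, py) should be visible from a sensor at (sx, sy)."""
        dx, dy = px - sx, py - sy
        if not self.min_range <= math.hypot(dx, dy) <= self.max_range:
            return False
        # Bearing of the point relative to the sensor heading, wrapped into [-pi, pi).
        bearing = (math.atan2(dy, dx) - heading + math.pi) % (2 * math.pi) - math.pi
        return abs(bearing) <= self.horizontal_fov / 2

    def cells_in_view(self, sx: float, sy: float, heading: float,
                      resolution: float) -> set[tuple[int, int]]:
        """Indices (i, j) of grid cells whose centres lie inside the field of view."""
        cells = set()
        i_lo = math.floor((sx - self.max_range) / resolution)
        i_hi = math.floor((sx + self.max_range) / resolution)
        j_lo = math.floor((sy - self.max_range) / resolution)
        j_hi = math.floor((sy + self.max_range) / resolution)
        for i in range(i_lo, i_hi + 1):
            for j in range(j_lo, j_hi + 1):
                cx, cy = (i + 0.5) * resolution, (j + 0.5) * resolution
                if self.contains(sx, sy, heading, cx, cy):
                    cells.add((i, j))
        return cells
```

The set returned by `cells_in_view` is the "expected to see" region: any cell in it that receives no data after a scan is a candidate occlusion.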

Second, an occlusion map is maintained and updated using the sensor FOV model and current sensor data. It provides a probabilistic estimate of which areas within the sensor FOV have not been observed.
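ASI does not say which probabilistic formulation it uses. One common way to maintain such a map, shown here purely as an assumption, is a per-cell log-odds estimate borrowed from occupancy grid mapping. Each scan, cells that the FOV model says should be visible but that received no returns gain evidence of being occluded, and cells that did receive returns lose it. The class name `OcclusionMap` and all increment and clamp values are hypothetical.

```python
import math


class OcclusionMap:
    """Hypothetical log-odds grid estimating P(cell is occluded).

    A generic occupancy-grid-style update for illustration; the increments
    and clamps are assumed values, not parameters published by ASI.
    """

    def __init__(self, l_occluded: float = 0.4, l_observed: float = -0.85,
                 l_min: float = -4.0, l_max: float = 4.0):
        self.log_odds: dict[tuple[int, int], float] = {}
        self.l_occluded = l_occluded  # evidence added when an in-FOV cell gets no returns
        self.l_observed = l_observed  # evidence added when a cell does get returns
        self.l_min, self.l_max = l_min, l_max  # clamping keeps the map able to change

    def update(self, fov_cells: set, observed_cells: set) -> None:
        """Fold in one scan: fov_cells should be visible, observed_cells were seen."""
        for cell in fov_cells | observed_cells:
            delta = self.l_observed if cell in observed_cells else self.l_occluded
            value = self.log_odds.get(cell, 0.0) + delta
            self.log_odds[cell] = min(self.l_max, max(self.l_min, value))

    def probability(self, cell: tuple[int, int]) -> float:
        """P(occluded); cells never updated stay at log-odds 0, i.e. 0.5 (unknown)."""
        return 1.0 / (1.0 + math.exp(-self.log_odds.get(cell, 0.0)))
```

A cell that stays dark across repeated scans while inside the FOV drifts toward probability 1, while a single return pulls it back down. The asymmetric increments chosen here make the map clear occlusions faster than it accumulates them, which is one possible design choice rather than a requirement.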

The third component is the integration of the occlusion map into an autonomous vehicle navigation system. It is designed to work with and complement existing obstacle detection and avoidance systems.
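How the occlusion map feeds the navigation system is not specified. A minimal sketch, assuming a grid-based planner that consumes per-cell costs, is to combine the output of an existing obstacle detector with occlusion probabilities. Known obstacles and likely occlusions (such as a possible drop-off) become impassable, while uncertain cells stay passable but cost more. The function name `traversal_cost`, the 0.7 blocking threshold, and the weight of 10 are all hypothetical.

```python
import math


def traversal_cost(cell, obstacle_cells, occlusion_prob,
                   block_threshold=0.7, occlusion_weight=10.0):
    """Hypothetical planner cost combining an existing obstacle map with an occlusion map.

    obstacle_cells: cells flagged by the existing obstacle detector.
    occlusion_prob: mapping from cell to P(occluded); missing cells count as 0.5.
    The threshold and weight are illustrative assumptions, not ASI's values.
    """
    if cell in obstacle_cells:
        return math.inf  # the existing obstacle detector keeps priority
    p = occlusion_prob.get(cell, 0.5)
    if p >= block_threshold:
        return math.inf  # likely occlusion, e.g. a possible drop-off: do not enter
    # Otherwise passable, but the planner prefers well-observed ground.
    return 1.0 + occlusion_weight * p
```

Keeping the obstacle check first means the occlusion map only adds caution on top of the existing detector and never overrides it, in line with the goal of complementing rather than replacing existing obstacle detection and avoidance.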

About ASI

Autonomous Solutions, Inc. (ASI) is a world leader in industrial vehicle automation. ASI serves clients across the world in the mining, agriculture, automotive, government, and manufacturing industries with remote control, teleoperation, and fully automated solutions from its headquarters and 100-acre proving ground in northern Utah.

Featured Product

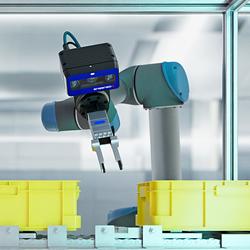

3D Vision: Ensenso B now also available as a mono version!

This compact 3D camera series combines a very short working distance, a large field of view and a high depth of field - perfect for bin picking applications. With its ability to capture multiple objects over a large area, it can help robots empty containers more efficiently. Now available from IDS Imaging Development Systems. In the color version of the Ensenso B, the stereo system is equipped with two RGB image sensors. This saves additional sensors and reduces installation space and hardware costs. Now, you can also choose your model to be equipped with two 5 MP mono sensors, achieving impressively high spatial precision. With enhanced sharpness and accuracy, you can tackle applications where absolute precision is essential. The great strength of the Ensenso B lies in the very precise detection of objects at close range. It offers a wide field of view and an impressively high depth of field. This means that the area in which an object is in focus is unusually large. At a distance of 30 centimetres between the camera and the object, the Z-accuracy is approx. 0.1 millimetres. The maximum working distance is 2 meters. This 3D camera series complies with protection class IP65/67 and is ideal for use in industrial environments.