Teaching Robots to ‘Feel with Their Eyes’

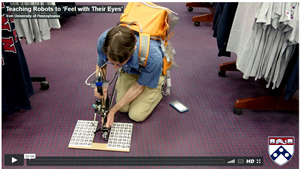

Penn’s School of Engineering and Applied Science: At first glance, Alex Burka, a Ph.D. student in Penn’s School of Engineering and Applied Science, looks like a ghostbuster. He walks into the Penn Bookstore strapped to a bulky orange backpack, holding a long, narrow instrument with various sensors attached.

The device looks exactly like the powerful nuclear accelerator backpack and particle thrower attachment used in the movies to attack and contain ghosts. For this reason, Burka and the other people who work with him jokingly refer to it as a “Proton Pack.”

But Burka is not in the business of hunting ghosts. Instead, he’s leading a project designed to enable robots to “feel with their eyes.” Using this Proton Pack, Burka hopes to build up a database of one thousand surfaces to help coach robots on how to identify objects and also to know what they’re made of and how best to handle them. The project is funded by the National Science Foundation as part of the National Robotics Initiative.

“We want to give robots common sense to be able to interact with the world like humans can,” Burka says. “To get that understanding for machines, we want a large dataset of materials and what they look and feel like. Then we can use some technologies emerging in AI, like deep learning, to take all that data and distill it down to models of the surface properties.”