Advancements in machine vision drive industrial automation and push the boundaries of what is feasible. Yet one limitation of machine vision long resisted every attempt to overcome it, severely restricting the range of tasks that could be automated. That is now changing.

Machine Vision of Tomorrow: How 3D Scanning in Motion Revolutionizes Automation

Andrea Pufflerova, PR Specialist | Photoneo

The market offers a number of machine vision technologies, and choosing the right one for a specific application may seem difficult. Each 3D sensing approach has its strengths and weaknesses, and there may be no single solution that satisfies all needs.

While each technology suits different applications, strong performance in one parameter always comes with trade-offs in others. Yet there is one major limitation that all 3D sensing approaches have struggled with and none has been able to overcome, until a novel technology was introduced.

Before discussing this challenge in more detail, let’s take a brief look at the machine vision technologies available on the market.

2D machine vision systems provide a flat, two-dimensional image, which is perfectly sufficient for simple applications such as barcode reading, character recognition, or dimension checking. However, they cannot recognize object shapes or measure distance in the Z dimension.

More advanced machine vision systems are based on 3D sensing technologies, which provide a three-dimensional point cloud of precise X, Y, and Z coordinates. These methods suit a wide range of robotic applications such as robot guidance, bin picking, dimensioning, quality control, detection of surface defects, and many other tasks.
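To make the difference from a flat image concrete, here is a minimal sketch that represents a point cloud as a plain list of (X, Y, Z) tuples and performs a very simple form of dimensioning: the extents of an axis-aligned bounding box. It assumes the points are already segmented to a single object, are expressed in metres, and that the object is aligned with the sensor axes; the function name is hypothetical, and real dimensioning systems typically filter noise and fit an oriented box instead.

```python
from typing import List, Tuple

# Hypothetical representation: each point is (X, Y, Z) in metres.
Point = Tuple[float, float, float]


def bounding_box_dimensions(cloud: List[Point]) -> Tuple[float, float, float]:
    """Return the X, Y and Z extents of an axis-aligned box around the cloud.

    Assumes the cloud contains only the object of interest and that the
    object is aligned with the sensor's X, Y and Z axes.
    """
    if not cloud:
        raise ValueError("point cloud is empty")
    xs, ys, zs = zip(*cloud)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


# A few points sampled from a box-shaped object (illustrative values).
box_points = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.5, 0.25, 0.0), (0.0, 0.25, 0.125)]
print(bounding_box_dimensions(box_points))  # (0.5, 0.25, 0.125)
```

A flat 2D image carries no Z coordinate, so the third extent, the object's height, could not be computed from it.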

3D sensing technologies can be classified into two main groups: Time-of-Flight (ToF) methods and triangulation-based methods. ToF technologies measure the time it takes for a light signal emitted from a light source to reach the scanned object and return to the sensor. Triangulation-based methods observe a scene from multiple perspectives and measure the angles of the triangles spanned between the scene and the observers, from which the exact 3D coordinates can be computed.
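Both principles reduce to short formulas. The sketch below is a minimal illustration, assuming an ideal single-bounce ToF measurement and an idealized two-observer triangulation in which both angles are measured from the baseline that joins the observers (in structured light, one of the two observers is the projector). The function names are hypothetical, and real sensors add calibration, lens models, and noise handling.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance(round_trip_time_s: float) -> float:
    """Time-of-Flight: distance from the round-trip time of a light signal.

    The light travels to the object and back, so the one-way distance is
    half of the total path length c * t.
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def triangulated_distance(baseline_m: float, alpha_rad: float, beta_rad: float) -> float:
    """Triangulation: perpendicular distance of a point from the baseline.

    Two observers a known baseline B apart each measure the angle between the
    baseline and their line of sight to the point. By the law of sines,
    distance = B * sin(alpha) * sin(beta) / sin(alpha + beta).
    """
    if alpha_rad <= 0 or beta_rad <= 0 or alpha_rad + beta_rad >= math.pi:
        raise ValueError("the two lines of sight must converge in front of the baseline")
    return baseline_m * math.sin(alpha_rad) * math.sin(beta_rad) / math.sin(alpha_rad + beta_rad)


print(tof_distance(10e-9))  # about 1.499 m for a 10 ns round trip
print(triangulated_distance(0.2, math.radians(60), math.radians(60)))  # about 0.173 m
```

The ToF formula shows why timing precision matters: a 10 ns round trip already corresponds to roughly 1.5 m, so millimetre-level depth requires resolving picosecond-scale differences.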

Here is an overview of major 3D sensing technologies:

● Time-of-flight methods
  - ToF area scan
  - LiDAR

● Triangulation-based methods
  - Laser triangulation (or profilometry)
  - Photogrammetry
  - Stereo vision (passive and active)
  - Structured light (single frame, multiple frames)
  - Novel Parallel Structured Light

The common limitation of standard 3D sensing technologies

The great challenge for 3D machine vision lies in scanning objects in motion and in the persistent trade-off between quality and speed.

But why is this task such a great challenge?

To capture a complete 3D snapshot of a scene, some methods, such as laser triangulation, which scans one profile at a time, require the camera or the scanned object to move, while others do not.

The structured light method, for example, encodes 3D information directly in the scene by projecting one or more coded structured light patterns. However, to achieve high accuracy and resolution, structured light systems need to capture multiple frames with different patterns, so the scene must remain static during acquisition. Otherwise, the 3D output is distorted.
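To see why sequential patterns require a static scene, consider a minimal sketch of multi-frame decoding. It assumes binary-reflected Gray code patterns, one common choice for multi-frame structured light (the article does not say which codes any particular system uses), and an illustrative 3-bit code covering 8 projector columns. Each camera pixel records one bit per frame, and the bits together identify the projector column that lit it. Thresholding, noise, and the final conversion from projector column to depth by triangulation are omitted; all names are hypothetical.

```python
from typing import Sequence

N_BITS = 3  # 3 patterns encode 2**3 = 8 projector columns (illustrative size)


def gray_encode(n: int) -> int:
    """Binary-reflected Gray code: neighbouring columns differ in one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing g with all of its right shifts."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def pattern_bit(frame: int, column: int) -> int:
    """1 if projector column `column` is lit in pattern `frame` (MSB first)."""
    return (gray_encode(column) >> (N_BITS - 1 - frame)) & 1


def decode_pixel(columns_seen: Sequence[int]) -> int:
    """Decode the projector column from the bits one camera pixel observed.

    columns_seen[k] is the projector column imaged by this pixel in frame k.
    In a static scene all entries are equal; motion makes them differ.
    """
    if len(columns_seen) != N_BITS:
        raise ValueError("need exactly one observation per pattern")
    g = 0
    for frame, column in enumerate(columns_seen):
        g = (g << 1) | pattern_bit(frame, column)
    return gray_decode(g)


print(decode_pixel([5, 5, 5]))  # 5: static scene, column decoded correctly
print(decode_pixel([5, 6, 7]))  # 7: scene moved between frames
```

In the moving case, each bit comes from a different surface point, so the decoded column no longer matches the one the pixel saw when the sequence started, and the depth computed from it is distorted.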

On the other hand, ToF area sensing systems are well suited for dynamic applications, as they are very fast and provide nearly real-time processing. However, they struggle to deliver high resolution while keeping noise at acceptable levels.

The compromise between quality and speed seemed insurmountable, as none of the existing 3D sensing methods could overcome the limitations of its acquisition process and data processing. The problem was only recently resolved by the novel Parallel Structured Light technology. How does it overcome the challenge of scanning in motion?

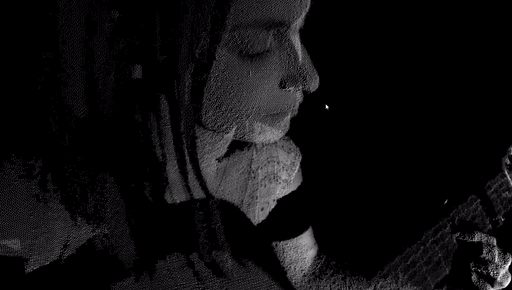

High-quality 3D reconstruction of a moving scene enabled by the Parallel Structured Light technology. Source: Photoneo

The “secret” behind Parallel Structured Light

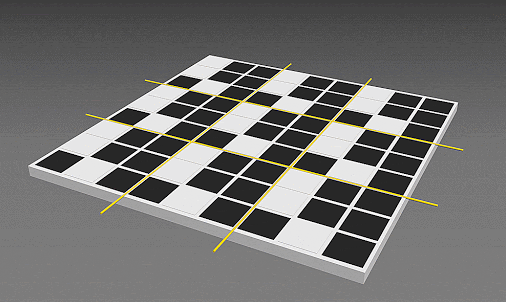

As the name suggests, the technology uses structured light in combination with a proprietary CMOS image sensor with a mosaic pixel pattern. As can be seen in the animation below, the sensor is divided into super-pixel blocks, which are further divided into subpixels.

Mosaic CMOS image sensor. Source: Photoneo

The laser from the projector stays on the entire time, while the individual pixels are repeatedly switched on and off within a single exposure window. Because this pixel modulation happens simultaneously across the sensor, the technology is called Parallel Structured Light.

This is what makes the technology so revolutionary. It needs only a single shot of the scene to acquire multiple virtual images, so it effectively “freezes” the scene during acquisition. The result is a high-quality 3D reconstruction of a moving scene without motion artifacts.
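The article does not describe how the sensor's output is organized, so the following is only a hypothetical sketch of the general idea of obtaining several virtual images from one mosaic frame. It assumes square super-pixels of block × block subpixels, and that the subpixel at the same position in every super-pixel is driven by the same modulation code; gathering that position across all super-pixels then yields one virtual image per code. The layout and the function name are illustrative assumptions, not Photoneo's implementation.

```python
from typing import List

Image = List[List[int]]


def split_virtual_images(raw: Image, block: int) -> List[Image]:
    """Split one mosaic frame into block * block virtual images.

    Hypothetical layout: the subpixel at offset (dy, dx) inside every
    block x block super-pixel shares one modulation code, so collecting that
    offset from all super-pixels gives the virtual image for that code.
    """
    height, width = len(raw), len(raw[0])
    if height % block or width % block:
        raise ValueError("frame size must be a multiple of the super-pixel size")
    virtual_images = []
    for dy in range(block):
        for dx in range(block):
            # Every block-th row starting at dy, every block-th column starting at dx.
            virtual_images.append([row[dx::block] for row in raw[dy::block]])
    return virtual_images


# A 4 x 4 raw frame made of four 2 x 2 super-pixels (values are just positions).
raw_frame = [[r * 4 + c for c in range(4)] for r in range(4)]
for index, image in enumerate(split_virtual_images(raw_frame, block=2)):
    print(index, image)
```

All virtual images come from the same exposure and therefore describe the scene at the same instant; in this simple sketch, each one holds 1/block² of the raw frame's pixels.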

For comparison, conventional structured light systems require a scene to remain still during the acquisition process because they capture projector-encoded patterns sequentially.

The Parallel Structured Light technology thus overcomes the challenge of 3D scanning in motion, offering the high resolution of multiple-frame structured light systems and the speed of single-frame ToF systems.

The new range of applications with no limits

Because Parallel Structured Light is the first and only technology that enables high-quality 3D area scanning of objects in motion, it opens up entirely new areas for automation.

Standard robotic tasks such as bin picking, palletization, depalletization, object sorting, machine tending, quality control, inspection, metrology, non-destructive harvesting, and many other applications can now be taken to the next level. Until recently, their automation was limited to static scenes. Parallel Structured Light shifts the paradigm by enabling the recognition, localization, and handling of objects in motion, significantly reducing cycle times and latency.

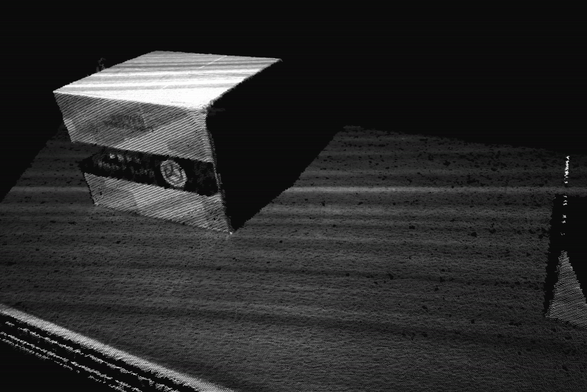

Scanning of boxes moving on a conveyor belt. Source: Photoneo

Thanks to this, the technology also greatly benefits hand-eye applications, in which a 3D camera is attached directly to the robotic arm. While standard vision systems require the robot to stop moving to trigger a scan, Parallel Structured Light can capture objects while the robot is in motion.

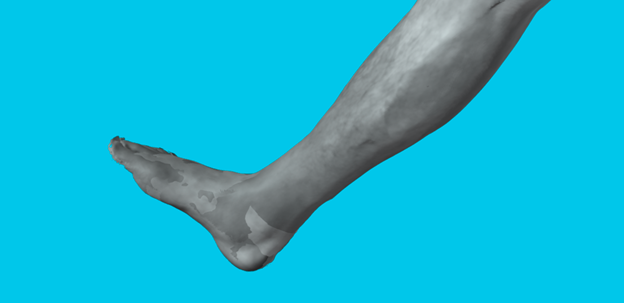

Scanning the human body or measuring the volume of shapeless and deformable moving objects - tasks that were unfeasible not such a long time ago are possible to do now. The Parallel Structured Light technology provides great versatility, uplifts automated lines, and enables tasks that were not possible to do with conventional robots or their programming, such as smarter cooperation of humans and robots in collaborative robotics, faster and more accurate picking, or unloading and loading of moving objects.

A scan of the human leg can be used for medical purposes. Source: Photoneo

The 3D camera powered by the Parallel Structured Light

The company behind the novel technology is Slovakia-based Photoneo, a developer of smart automation solutions that are based on a combination of outstanding, original 3D machine vision and robot intelligence.

The technology is implemented in Photoneo’s 3D camera MotionCam-3D, making the device the highest-resolution and highest-accuracy area-scan 3D camera suited to dynamic scenes. The camera can capture objects moving at up to 144 kilometers per hour and delivers 20 fps and a point cloud resolution of up to 2 Mpx with a high level of 3D detail. Industrial-grade quality is ensured by thermal calibration and an IP65 rating, which makes the camera dust-tight and water-resistant and helps it deliver stable, reliable performance in various working conditions. Together with effective resistance to vibrations and high performance in demanding lighting conditions, these features allow the camera to be deployed in challenging industrial environments.
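To put the speed figure in perspective, the quoted numbers can be combined in a quick back-of-the-envelope calculation for an object moving at the maximum of 144 km/h while the camera runs at 20 fps:

$$
v = 144\ \text{km/h} = \frac{144\,000\ \text{m}}{3\,600\ \text{s}} = 40\ \text{m/s},
\qquad
\Delta t = \frac{1}{20\ \text{fps}} = 0.05\ \text{s},
\qquad
\Delta x = v\,\Delta t = 40\ \text{m/s} \times 0.05\ \text{s} = 2\ \text{m}.
$$

Such an object thus moves 2 m between consecutive frames. How far it moves within a single exposure depends on the exposure time, which is not stated here.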


MotionCam-3D powered by the Parallel Structured Light technology. Source: Photoneo

The five models of the camera cover a wide scanning range, from items as small as a teacup to objects the size of a EUR-pallet. Three scanning modes add versatility for different application requirements: one for capturing dynamic scenes, one for static scenes, and one for creating 2D images only.

The demand for smart automation solutions is more urgent than ever and growing across all industries; logistics and e-commerce are just two examples of this trend. Advanced automation solutions come as a “silver bullet” for insufficient productivity and a shrinking labor force. Automating the picking, checking, and sorting of boxes, for instance, with a combination of powerful machine vision and robot intelligence helps boost productivity and efficiency, optimize processes, reduce costs, save time, and significantly reduce the risk of injuries.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
